Ansarada – AiDA simple survey

Gain insight into the accuracy of responses from AiDA, Ansarada’s AI.

Problem

We don’t currently know whether AiDA is answering questions in a way that people find helpful.

Intent

We need to assess the effectiveness of AiDA by asking people how well their questions were answered.

Goal

We have a lot of assumptions as to how well AiDA is performing, but nothing concrete. So we intend to learn as we go and evolve our approach as we track and respond to feedback.

How do we plan to achieve this?

Design and introduce a simple flow that displays a survey once people have received a response from AiDA.

The flow should not be intrusive, and it must be quick and easy for the user to respond; we don’t want to interrupt them or create more work.

We will assess the simple survey results weekly.

We will review and synthesise all user responses using affinity diagramming, then use a 2×2 matrix or dot voting to help prioritise action points.

The information will be used to provide solutions and recommendations for the team.
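As a rough illustration of the weekly assessment, the sketch below groups survey responses by ISO week and reports how many were marked helpful, along with any comments to carry into the affinity-diagramming session. The `SurveyResponse` fields (`submitted_on`, `helpful`, `comment`) and the `weekly_summary` function are hypothetical; the actual survey data format is not defined in this document.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class SurveyResponse:
    # Hypothetical record shape; the real survey fields are an assumption.
    submitted_on: date
    helpful: bool
    comment: Optional[str] = None


def weekly_summary(responses):
    """Group responses by ISO (year, week) and summarise each week."""
    buckets = defaultdict(list)
    for r in responses:
        iso = r.submitted_on.isocalendar()
        buckets[(iso[0], iso[1])].append(r)

    summary = {}
    for week, items in sorted(buckets.items()):
        helpful = sum(1 for r in items if r.helpful)
        summary[week] = {
            "responses": len(items),
            "helpful_share": helpful / len(items),
            # Free-text comments are kept for manual synthesis.
            "comments": [r.comment for r in items if r.comment],
        }
    return summary
```

The numeric share gives a quick week-on-week signal, while the comments are left untouched so they can be clustered by hand.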

What we will do to achieve this

Look through existing AiDA metrics

Direct and indirect competitor research – how are other companies solving this problem?

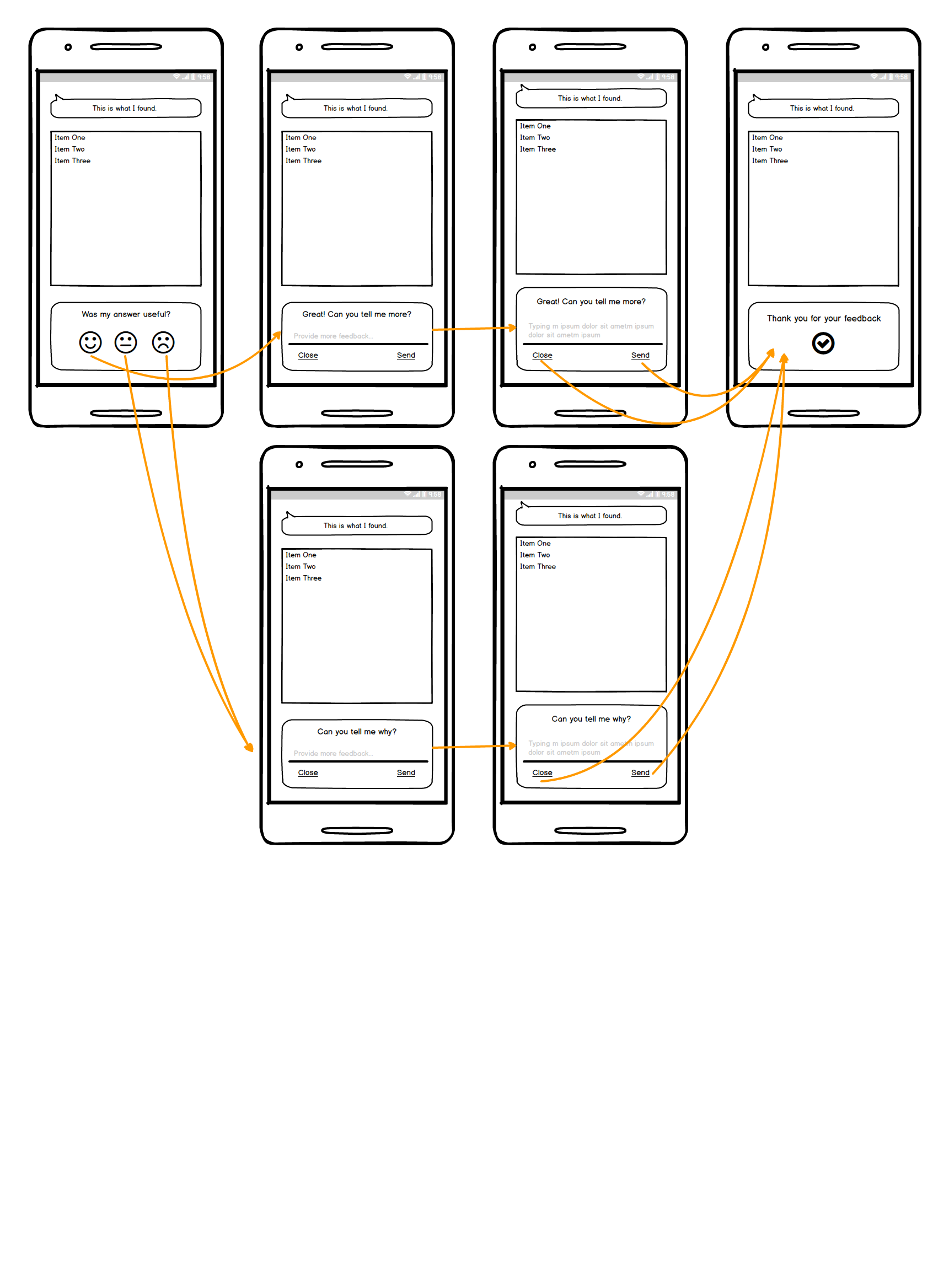

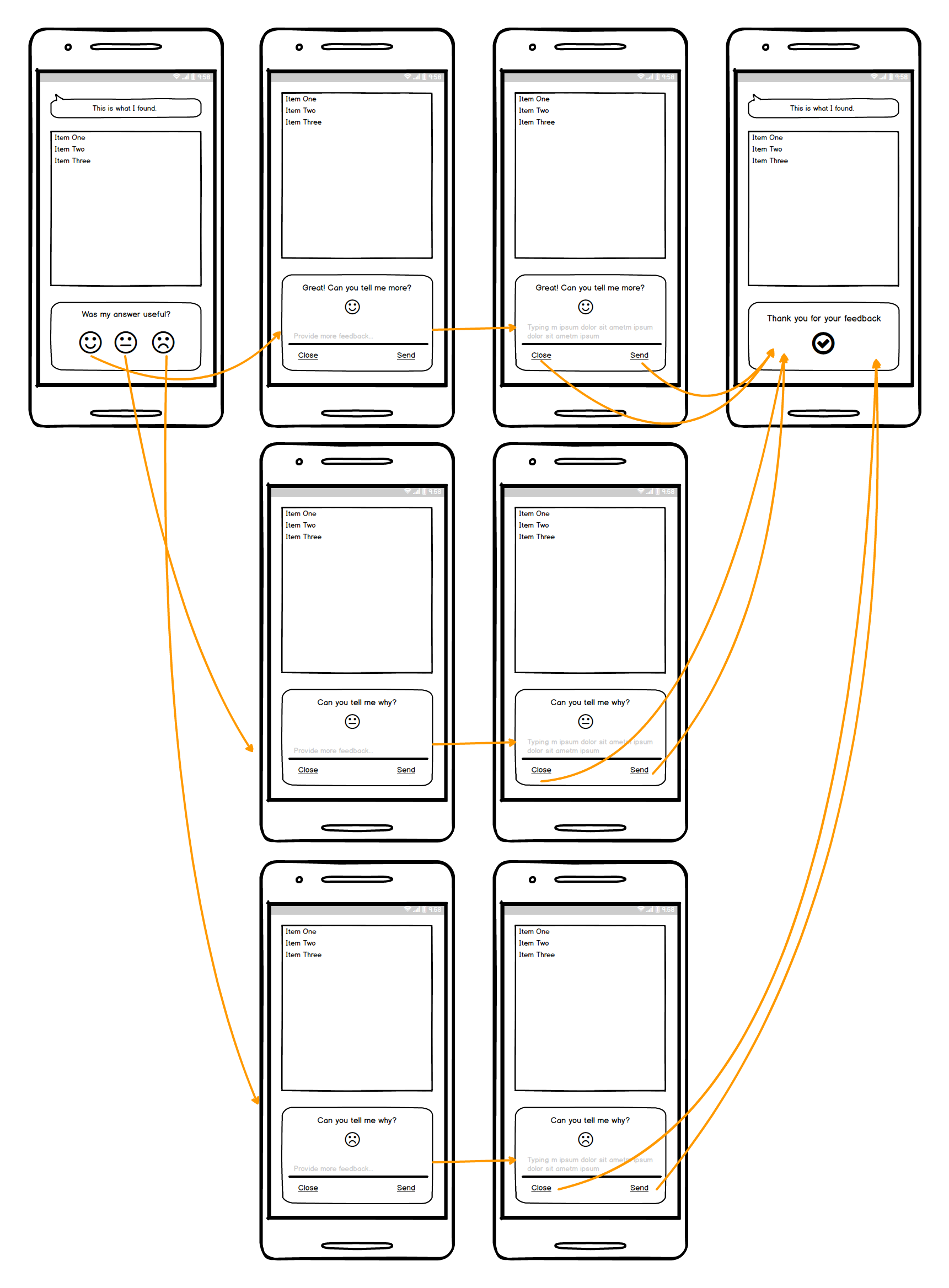

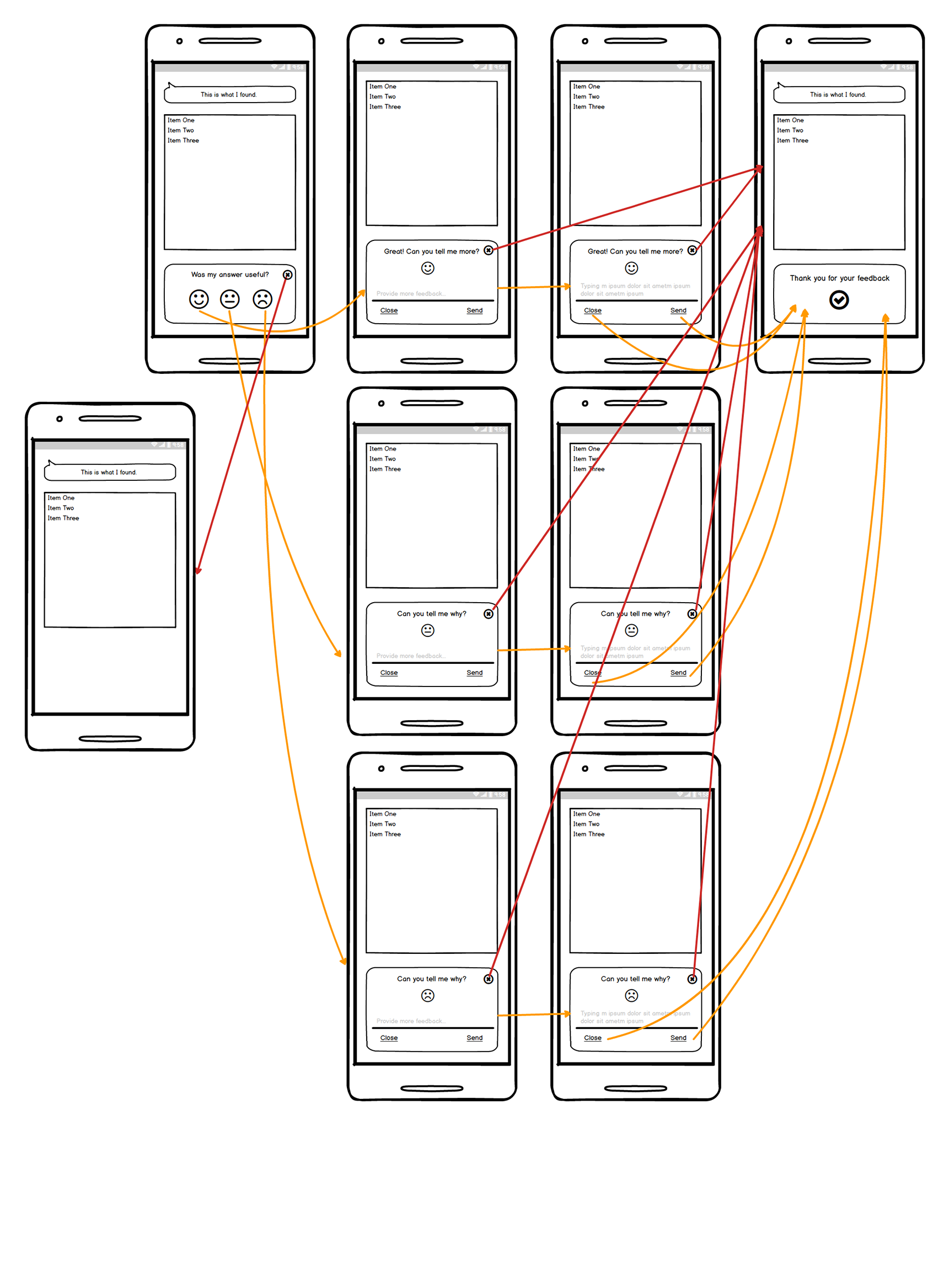

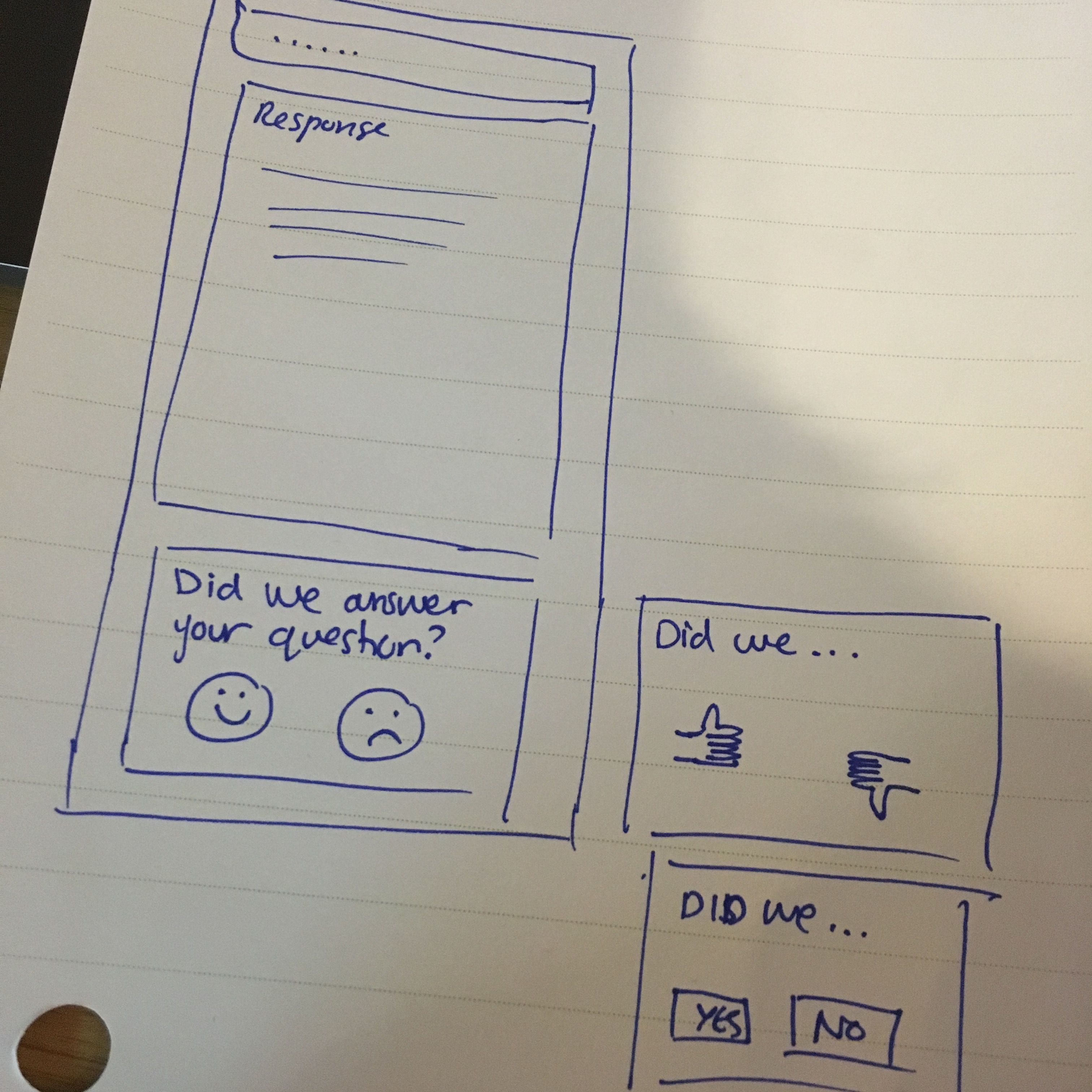

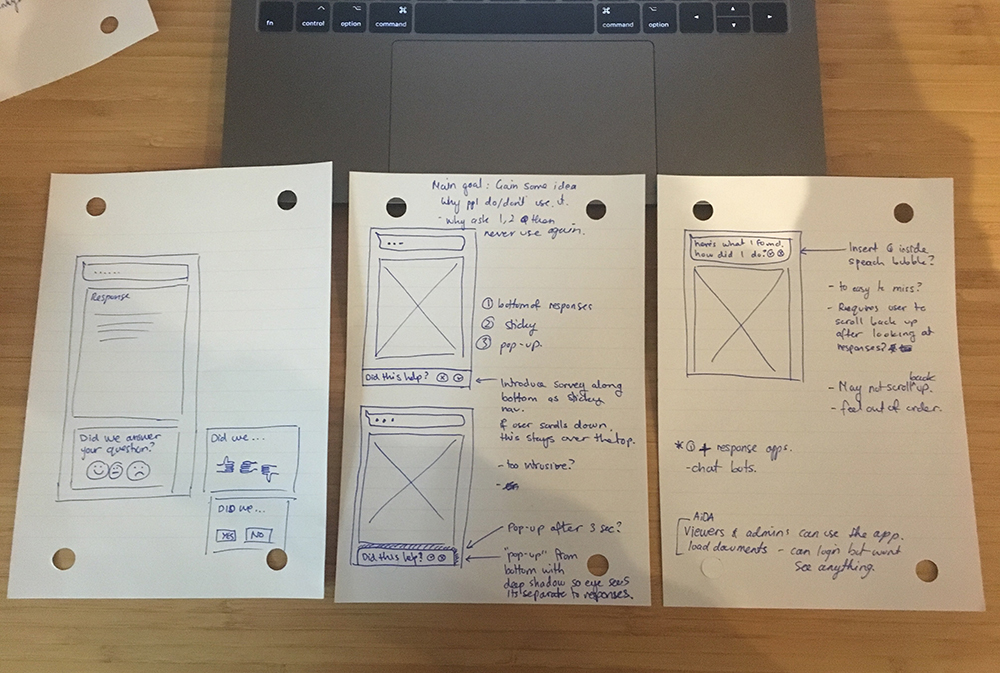

Low-fidelity sketched wireframes

Run a hallway test

- Assess validity of question phrasing

- UI/flow feedback

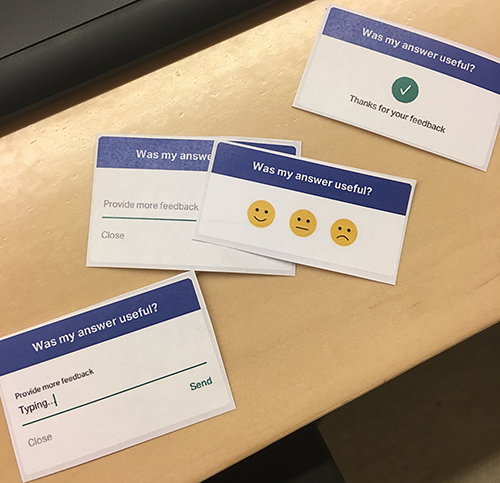

Test strategy

- Approach a user and prompt them to ask AiDA a question using the real app.

- Once a response is given, place a paper prototype showing the simple survey over the phone. Do the same for all simple survey actions.

- Observe how they go through the simple survey, asking them to verbalise their thoughts and decisions.

- If the user is stuck, prompt them:

  - What would you do next? (if they don’t carry out any actions)

  - What would you say? (in the comment box)

- Bring the user’s attention back to the question. Without leading them, try to gauge how clear they felt the question was. Was it specific enough?

  - What were you thinking when you read the question? (at the end of the task)

- Depending on the user’s feedback regarding the question, consider showing them alternatives:

  - How useful was my answer?

  - Was my answer accurate?

  - How good was this answer from AiDA?

  - Did I answer your question correctly?

Hallway test findings

Question related

- 3/4 people found the question clear, and knew how to respond

- User 3 didn’t like the question, so some alternatives were presented based on their comments:

  - Did I answer your question?

  - How satisfied are you with my answer?

- In the end, they reverted to the original question as being more to the point and more open to their comments.

UI related

- Update text in the blue header as they click through the survey

- Even if AiDA doesn’t have an answer, her response should be more specific, not just ‘There are not matching results’

- e.g. “0 people logged in today” or “0 people are in the blue team”
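One way to produce the more specific wording suggested in the last finding is to restate what was counted, even when the count is zero. This is a minimal sketch only; `describe_count` and its parameters are hypothetical and do not reflect how AiDA actually builds its responses.

```python
def describe_count(count: int, singular: str, plural: str) -> str:
    """Return a specific answer that restates what was counted.

    `singular` and `plural` are caller-supplied phrasings (an assumption),
    e.g. "person logged in today" / "people logged in today".
    """
    phrase = singular if count == 1 else plural
    return f"{count} {phrase}"


# Zero results still yield a concrete, readable answer.
print(describe_count(0, "person logged in today", "people logged in today"))
print(describe_count(0, "person is in the blue team", "people are in the blue team"))
```

Restating the subject of the question lets the user confirm that AiDA understood what was asked, which a generic "no results" message cannot do.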

Iterate/refine sketches

Share with wider AiDA team

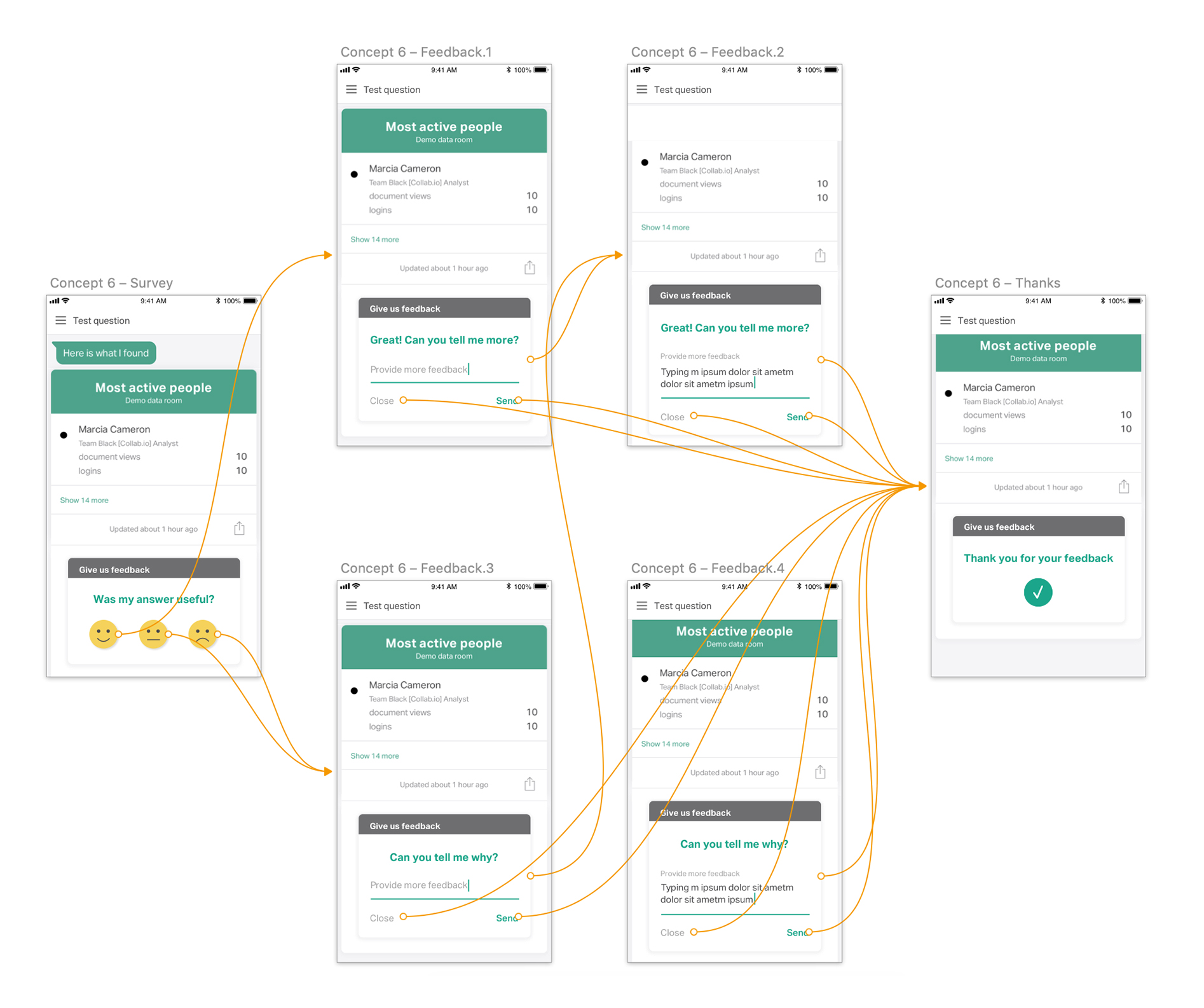

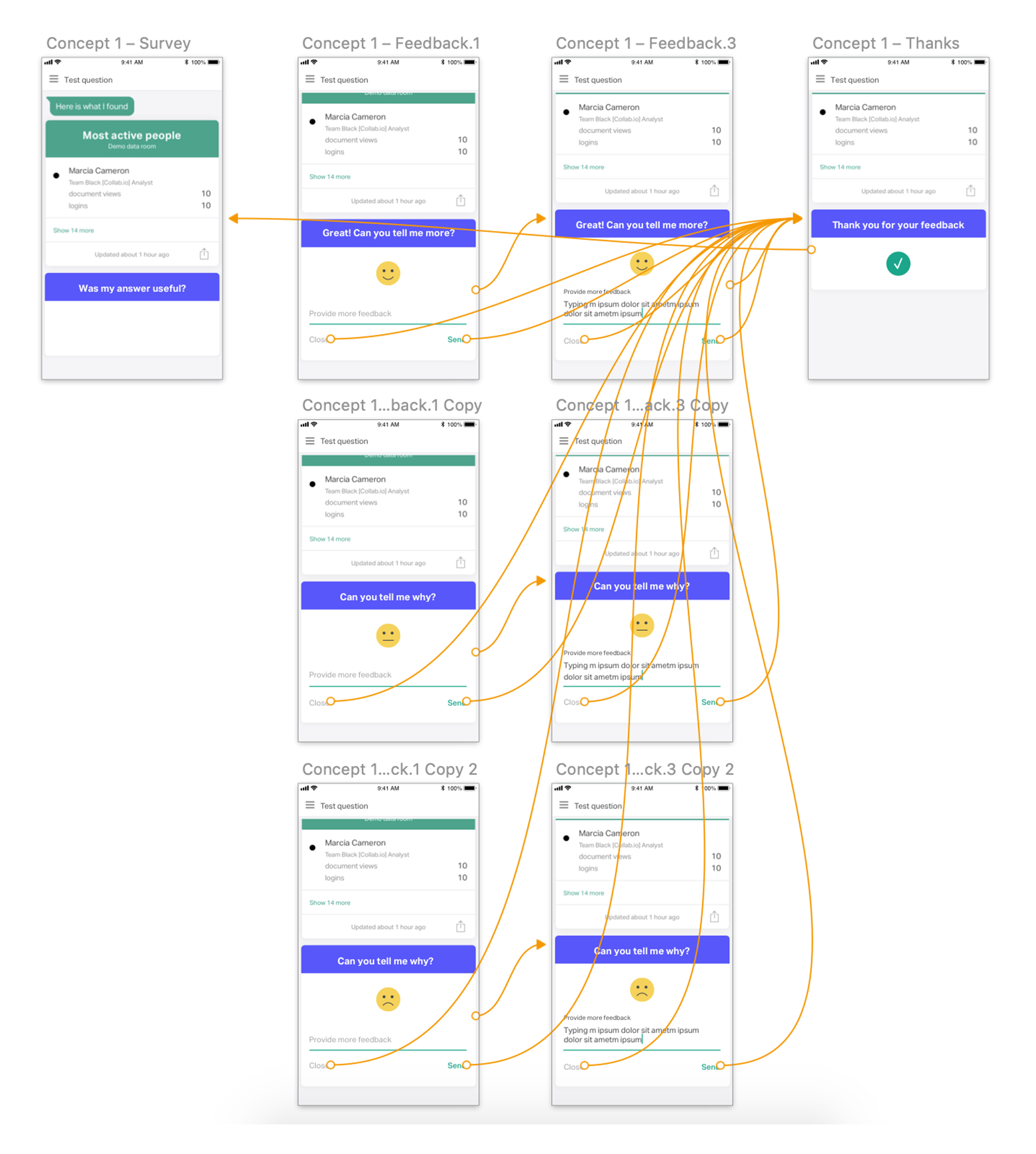

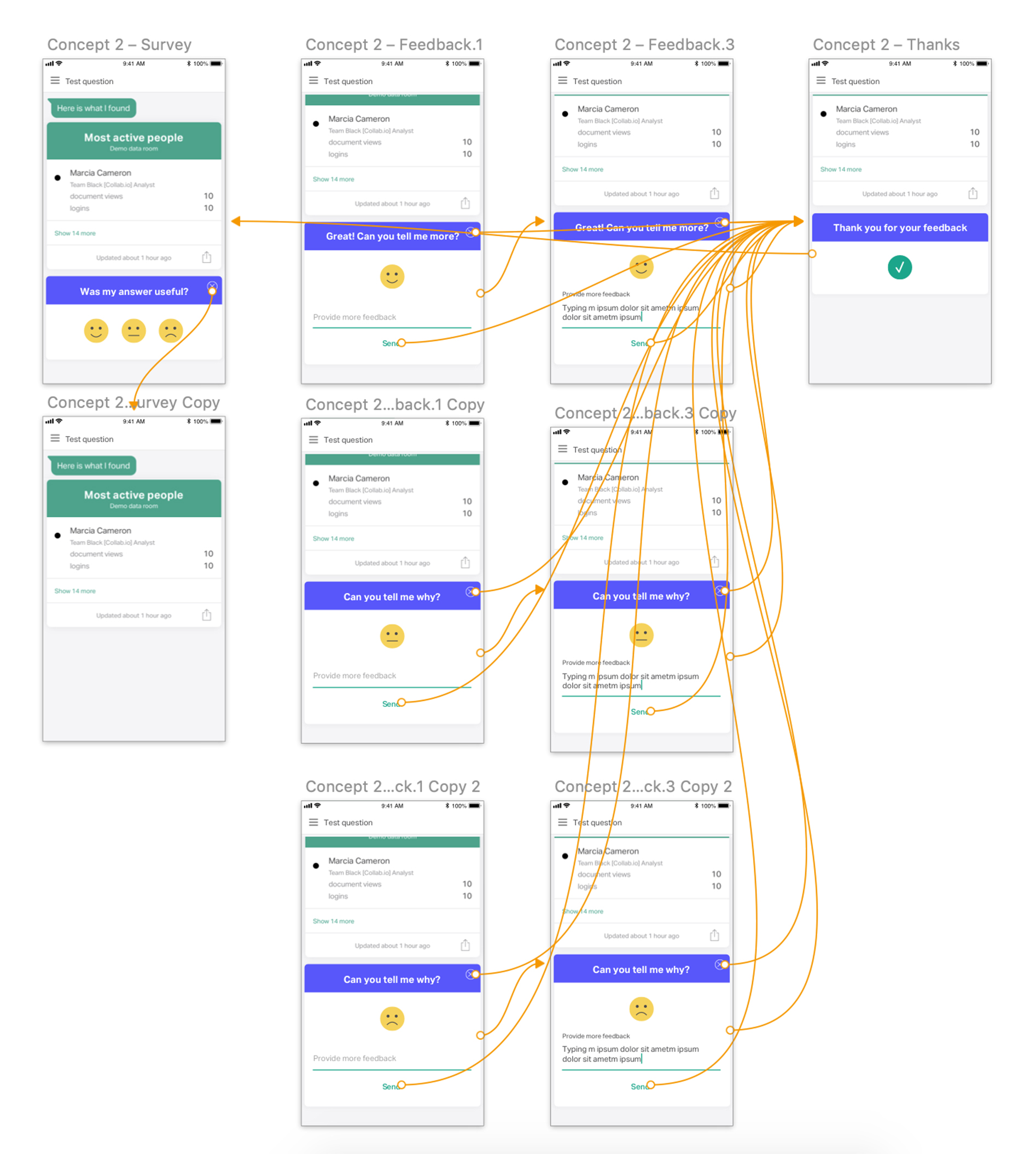

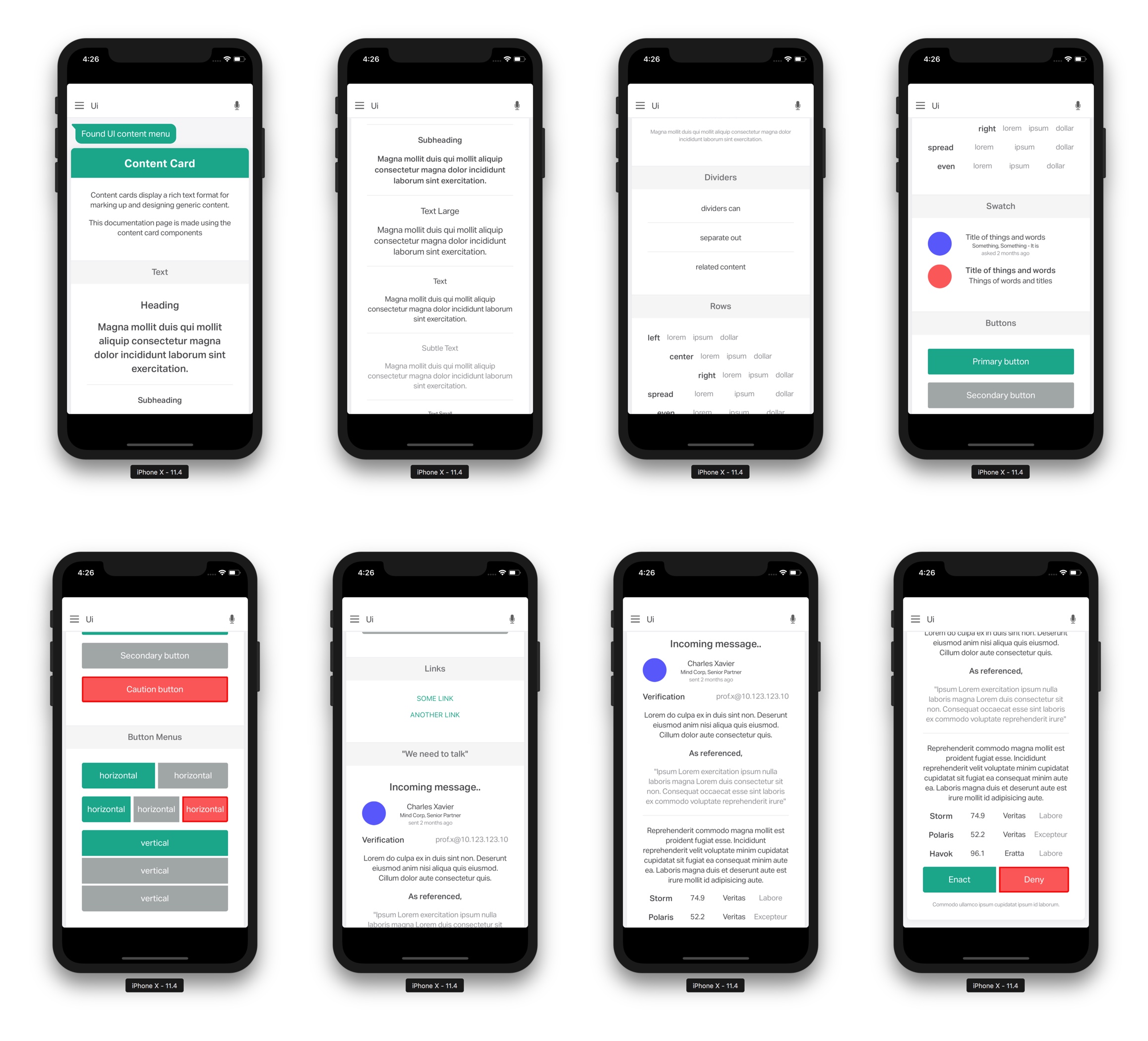

Prototype flow

High-fidelity UI solutions using the existing component library.

Gain feedback often from stakeholders

- If the survey is positioned at the bottom, consider how it may be affected by a longer response taking up more screen real estate.

Considerations

- Keep it simple, don’t reinvent the wheel

- Have a long-term goal in mind, but for now focus on the simplest solution that can get out fast, so we can test, learn and iterate

Produce high-fidelity prototypes

Concept 3:

Using only existing components

Concept 4:

Removing the bold green bar at the top, and dropping the text into the white card – this puts less emphasis on the survey, so as not to distract the user from the response.

Concept 5:

Changing the colour to grey, and inserting a consistent headline, “Give us feedback”, across all frames. Moving the question copy down, within the white area of the card.

Concept 6:

For consideration of a future iteration.

This concept takes into consideration the future direction of AiDA: moving towards more of a continuous conversation chatbot. Rather than refreshing the screen each time a question is asked, the Q&A will follow on continuously.

So, for this concept, the survey has been moved up to sit within the response card rather than separate from it. If another question is asked, the survey will simply move up the screen, remaining attached to the original response.